CNNs vs. GANs: Understanding Key Differences in Deep Learning

Deep learning has revolutionized numerous fields by providing powerful tools for data analysis and pattern recognition. Among these tools, Convolutional Neural Networks (CNNs) and Generative Adversarial Networks (GANs) stand out for their unique capabilities and applications. Understanding the distinctions between these two architectures is crucial for leveraging their strengths in various tasks. This article delves into the key differences between CNNs and GANs, highlighting their purposes, structures, functionalities, training processes, applications, outputs, and key techniques.

Overview of Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) are a class of deep learning models primarily used for image-processing tasks. They are designed to automatically and adaptively learn spatial hierarchies of features from input images. CNNs have been instrumental in advancing the field of computer vision and have found applications in image and video recognition, object detection, and image segmentation.

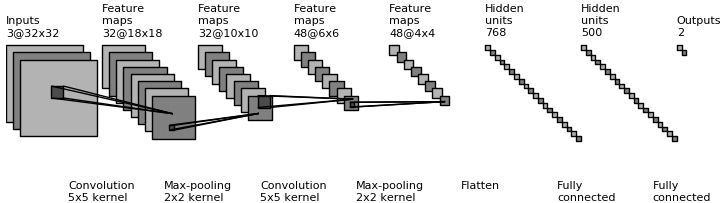

Structure of CNNs:

- Convolutional Layers: These layers apply convolution operations to the input, extracting features such as edges, textures, and patterns.

- Pooling Layers: These layers reduce the spatial dimensions of the feature maps (for example, 2x2 max pooling with stride 2 halves the height and width), which improves computational efficiency and reduces the risk of overfitting.

- Fully Connected Layers: After several convolutional and pooling layers, fully connected layers combine the features to classify the input image into categories.
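
The pooling and fully connected stages can be illustrated in plain Python. This is a minimal sketch, assuming a 2x2 max-pooling window with stride 2 and a single fully connected layer followed by softmax; the helpers `max_pool2d` and `dense_softmax` and all weight values are hypothetical, hand-picked for illustration rather than learned.

```python
import math

def max_pool2d(fmap, size=2, stride=2):
    """Max pooling over a 2D feature map given as a list of lists (no padding)."""
    out = []
    for i in range(0, len(fmap) - size + 1, stride):
        row = []
        for j in range(0, len(fmap[0]) - size + 1, stride):
            # Keep only the strongest activation in each size x size window
            window = [fmap[i + di][j + dj] for di in range(size) for dj in range(size)]
            row.append(max(window))
        out.append(row)
    return out

def dense_softmax(features, weights, biases):
    """Fully connected layer (one weight row per class) followed by softmax."""
    logits = [sum(w * f for w, f in zip(w_row, features)) + b
              for w_row, b in zip(weights, biases)]
    m = max(logits)  # subtract the max logit for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# A 4x4 feature map, as a convolutional layer might produce
fmap = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 2],
    [2, 2, 3, 4],
]
pooled = max_pool2d(fmap)                  # 4x4 -> 2x2
flat = [v for row in pooled for v in row]  # flatten before the dense layer
print(pooled)  # [[4, 2], [2, 5]]

# Hypothetical, hand-picked weights for two classes (a real CNN learns these)
weights = [[0.5, 0.0, 0.0, 0.0],
           [0.0, 0.0, 0.0, 0.5]]
biases = [0.0, 0.0]
probs = dense_softmax(flat, weights, biases)
print(probs)  # two class probabilities that sum to 1
```

Pooling shrinks the 4x4 map to 2x2 while keeping the strongest responses, and the dense layer turns the flattened features into class probabilities.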

Functionality of CNNs:

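CNNs work by sliding small filters (kernels) across the input image. At each position, a filter computes a weighted sum of the pixels beneath it, producing a feature map that responds strongly wherever its feature (such as an edge) appears. The filter weights are not hand-designed: during training, backpropagation adjusts them to minimize prediction error, so the network learns which features matter for the task.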
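
The sliding-filter operation can be sketched in plain Python as follows. This assumes a single-channel image, stride 1, and no padding, and it uses a hand-written vertical-edge filter where a trained CNN would use learned weights; `conv2d` is a hypothetical helper, not a library function. As in most deep learning libraries, the operation is technically cross-correlation (the kernel is not flipped).

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution with stride 1 and no padding.

    Like most deep learning libraries, this computes cross-correlation:
    the kernel is applied as-is, without flipping.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch currently under the filter
            s = 0
            for di in range(kh):
                for dj in range(kw):
                    s += image[i + di][j + dj] * kernel[di][dj]
            row.append(s)
        out.append(row)
    return out

# 5x5 image: dark left columns (0), bright right columns (9)
image = [[0, 0, 9, 9, 9] for _ in range(5)]

# Hand-written vertical-edge filter (a trained CNN would learn its weights)
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
edges = conv2d(image, kernel)
print(edges)  # [[27, 27, 0], [27, 27, 0], [27, 27, 0]]
```

The filter responds strongly (27) wherever the dark-to-bright boundary falls inside its window and gives 0 over the uniform bright region, which is the kind of edge feature early convolutional layers tend to detect.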

Overview of Generative Adversarial Networks (GANs)

Generative Adversarial Networks (GANs) are a type of deep learning model used to generate new data samples that resemble a given dataset. Introduced by Ian Goodfellow and his colleagues in 2014, GANs consist of two neural networks, the Generator and the Discriminator, which are trained together through a process of adversarial learning.

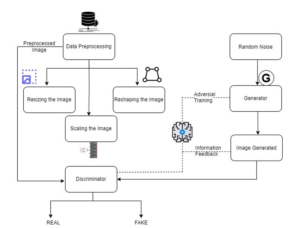

Structure of GANs:

- Generator: The Generator creates fake data samples from random noise, attempting to mimic the real data distribution.

- Discriminator: The Discriminator evaluates whether the input data is real (from the training dataset) or fake (produced by the Generator).

Functionality of GANs:

GANs operate through a competitive process where the Generator tries to fool the Discriminator with fake data, while the Discriminator strives to correctly identify real versus fake data. This adversarial training helps the Generator improve its ability to create realistic data over time.
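
The competition is usually expressed through binary cross-entropy losses on the Discriminator's output probabilities. The sketch below assumes those probabilities are already computed (here they are hand-picked numbers rather than outputs of real networks) and uses the "non-saturating" Generator loss suggested in the original GAN paper, which maximizes log D(G(z)) instead of minimizing log(1 - D(G(z))). The function names are hypothetical.

```python
import math

def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Binary cross-entropy for the Discriminator.

    d_real: D's estimated probability that each real sample is real (target 1)
    d_fake: D's estimated probability that each generated sample is real (target 0)
    """
    real_term = -sum(math.log(p + eps) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + eps) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake, eps=1e-12):
    """Non-saturating Generator loss: low when D labels generated samples as real."""
    return -sum(math.log(p + eps) for p in d_fake) / len(d_fake)

# Case 1: a strong Discriminator that easily separates real from fake
strong_d = discriminator_loss([0.9, 0.8], [0.1, 0.2])
strong_g = generator_loss([0.1, 0.2])

# Case 2: D can no longer tell the difference and outputs 0.5 everywhere
eq_d = discriminator_loss([0.5, 0.5], [0.5, 0.5])
eq_g = generator_loss([0.5, 0.5])

print(round(strong_d, 3), round(strong_g, 3))
print(round(eq_d, 3), round(eq_g, 3))  # 1.386 0.693 (2*ln 2 and ln 2)
```

When the Discriminator wins easily, its loss is low and the Generator's loss is high; training pushes the Generator to close that gap. In a real GAN, both losses are backpropagated through their respective networks in alternating steps.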

Key Differences Between CNNs and GANs

| Aspect | Convolutional Neural Networks (CNNs) | Generative Adversarial Networks (GANs) |
| --- | --- | --- |
| Purpose | Image recognition and classification | Data generation |
| Structure | Convolutional, pooling, fully connected layers | Generator and Discriminator |
| Functionality | Feature extraction from images | Adversarial generation and evaluation of data |
| Training | Supervised learning with labeled data | Adversarial learning with Generator and Discriminator |
| Applications | Image/video recognition, object detection | Image synthesis, style transfer |
| Output | Class probabilities, feature maps | New, synthetic data samples |
| Techniques | Convolution, pooling, ReLU activation | Adversarial loss, latent space exploration |

Example Models

Example CNN Models

- LeNet-5:
  - Developed By: Yann LeCun and colleagues
  - Purpose: Handwritten digit recognition
  - Structure: Consists of two sets of convolutional and pooling layers, followed by three fully connected layers.
  - Applications: Used primarily for digit recognition in the MNIST dataset.

- AlexNet:
  - Developed By: Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton
  - Purpose: Image classification
  - Structure: Includes five convolutional layers, some followed by max-pooling layers, and three fully connected layers.
  - Applications: Achieved state-of-the-art performance in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2012.

- ResNet (Residual Network):
  - Developed By: Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun
  - Purpose: Image classification, object detection
  - Structure: Features residual blocks with skip connections to allow for the training of very deep networks.
  - Applications: Used in various computer vision tasks, including image classification and object detection.

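
ResNet's skip connection can be illustrated with a toy residual block that computes relu(F(x) + x). This is a minimal sketch, assuming tiny fully connected layers in place of the convolutions and batch normalization that ResNet actually uses in its residual branch; `linear`, `relu`, and `residual_block` are hypothetical helpers, and the weights are hand-picked.

```python
def relu(v):
    return [max(0.0, x) for x in v]

def linear(v, weights, bias):
    """Toy fully connected layer: one weight row per output unit."""
    return [sum(w * x for w, x in zip(row, v)) + b for row, b in zip(weights, bias)]

def residual_block(x, w1, b1, w2, b2):
    """Computes relu(F(x) + x), where F is two toy layers with a ReLU in between."""
    f = linear(relu(linear(x, w1, b1)), w2, b2)      # residual branch F(x)
    return relu([fi + xi for fi, xi in zip(f, x)])  # skip connection adds x back

x = [1.0, 2.0, 3.0]
zero_w = [[0.0] * 3 for _ in range(3)]
zero_b = [0.0] * 3

# With all-zero weights the residual branch outputs 0, so the block passes x through
print(residual_block(x, zero_w, zero_b, zero_w, zero_b))  # [1.0, 2.0, 3.0]

# Hypothetical non-trivial weights: identity first layer, 0.5 * identity second layer
eye = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
half_eye = [[0.5 * v for v in row] for row in eye]
print(residual_block(x, eye, zero_b, half_eye, zero_b))  # [1.5, 3.0, 4.5]
```

Because the block only has to learn the residual F(x) on top of its input, a block whose branch contributes nothing still passes a non-negative input through unchanged, which is one intuition for why very deep stacks of such blocks remain trainable.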

Example GAN Models

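- DCGAN (Deep Convolutional GAN):
  - Developed By: Alec Radford, Luke Metz, and Soumith Chintala
  - Purpose: Image generation
  - Structure: Builds both the Generator and the Discriminator from convolutional layers (transposed convolutions in the Generator, strided convolutions in the Discriminator) instead of fully connected and pooling layers, and uses batch normalization to stabilize training.
  - Applications: Generates high-quality images from random noise.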

- CycleGAN:
  - Developed By: Jun-Yan Zhu, Taesung Park, Phillip Isola, and Alexei A. Efros
  - Purpose: Image-to-image translation without paired data
  - Structure: Employs two Generators and two Discriminators to learn mappings between two domains.
  - Applications: Used for tasks such as translating photos into paintings and vice versa.

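- StyleGAN:
  - Developed By: NVIDIA researchers (Tero Karras, Samuli Laine, and Timo Aila)
  - Purpose: High-quality image generation
  - Structure: Redesigns the Generator using ideas from style transfer: a mapping network turns the input noise vector into an intermediate latent code that modulates each layer through adaptive instance normalization (AdaIN), so that coarse features (such as pose and face shape) and fine features (such as color and texture) can be controlled separately.
  - Applications: Known for generating extremely realistic human faces.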
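
CycleGAN ties its two Generators together with a cycle-consistency loss: translating a sample to the other domain and back should recover the original. Following the paper's notation, G maps domain X to Y and F maps Y to X, and the reconstruction error is measured with an L1 distance. The sketch below is a simplified illustration, assuming hypothetical toy functions on short vectors in place of the two Generator networks, and it omits the adversarial losses that CycleGAN combines with this term.

```python
def l1(a, b):
    """Mean absolute error between two equal-length vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def cycle_consistency_loss(x_batch, y_batch, G, F):
    """L1 cycle loss: x -> G(x) -> F(G(x)) should return to x,
    and y -> F(y) -> G(F(y)) should return to y."""
    forward = sum(l1(F(G(x)), x) for x in x_batch) / len(x_batch)
    backward = sum(l1(G(F(y)), y) for y in y_batch) / len(y_batch)
    return forward + backward

# Hypothetical toy "generators" standing in for the two CycleGAN networks
def to_y(x):          # X -> Y: scale by 2
    return [2 * v for v in x]

def to_x_good(y):     # Y -> X: exact inverse of to_y
    return [v / 2 for v in y]

def to_x_bad(y):      # Y -> X: not an inverse of to_y
    return [v / 2 + 1 for v in y]

xs = [[1.0, 2.0], [3.0, 4.0]]
ys = [[2.0, 6.0]]
print(cycle_consistency_loss(xs, ys, to_y, to_x_good))  # 0.0
print(cycle_consistency_loss(xs, ys, to_y, to_x_bad))   # 3.0
```

A pair of mappings that undo each other scores zero, while a mismatched pair is penalized; this is what lets CycleGAN learn translations without paired training examples.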

Conclusion

Both Convolutional Neural Networks (CNNs) and Generative Adversarial Networks (GANs) are pivotal in the field of deep learning, each serving distinct purposes and excelling in different applications. CNNs are the backbone of image analysis tasks, while GANs excel in data generation and creative applications. By understanding their unique structures, functionalities, and training processes, practitioners can harness the power of these architectures to drive innovation and achieve breakthroughs in various domains.